Raid arrays on Debian

I’ve been wondering for a while now how to configure my server when it comes to raid arrays. There are all kinds of configurations out there; raid levels 0, 1, 5, 10, or some combination of these, seem to be the most popular. There’s also the choice between software and hardware raid.

I’ve decided to take one of my servers and benchmark 2 different raid arrays on it.

After googling around and researching different raid configurations, I decided that either raid 5 or raid 10 would be my best bet. So these 2 setups would be benchmarked against each other to help me decide which one to use.

Since the server in use doesn’t have a hardware raid controller, software raid was the only real option. There is onboard raid on the motherboard, but I think it’s just fake raid anyway (basically software raid).

Since Linux’s mdadm tool provides very powerful raid configuration, I’m happy to just use it instead of the onboard raid.

Background on Raid 5 and Raid 10

Raid 0: Stripe of 2 or more disks

Raid 1: Mirror of 2 or more disks

Raid 5: Stripe of disks with parity for redundancy. Requires 3 or more disks.

Raid 10: Stripe (raid 0) across 2 or more raid 1 mirrors.

You can read up on more info about different raid configurations below.

Background on server

The server is mainly a web, email, proxy and file server. There will be lots of writes and reads hitting the disks. It serves a network of about 60 workstations.

The server will be running Debian Sarge on an x86 architecture with a 2.6.16 kernel.

Contents of box:

Intel Pentium 4 3.6 GHz (HT turned off)

Gigabyte 955x Royal Motherboard

2 Gig Memory

4 SATA WD 320 Gig Hard Drives

The motherboard in this box has 6 SATA ports, 4 from Intel on an ICH7 controller and 2 on a separate Silicon controller. I use the 2 Silicon ports to host backup hard disks, and run the main system on the 4 Intel ports.

Because of heat and the design of the case, only 6 drives really work in this system. I suppose I could have purchased a SATA card (or a SATA raid card) to get more drives, but for ease of setup and budget, 6 drives were enough to set up the box.

Okay, so I have 4 disks to play with. I could use raid 5 or raid 10. But which one?

Well, raid 5 will give a lot more disk space: 75% of the total with 4 disks, since one disk’s worth of space goes to parity. Raid 10 only gives 50% of the total. So right away, raid 5 is winning without doing any benchmarks. However, with raid 5, if more than one disk fails at the same time, all data is lost. Raid 10 can handle multiple disk failures, but it’s not perfect: as long as both disks in the same raid 1 mirror don’t fail at the same time, everything is okay. So raid 10 has a little better redundancy than raid 5, but less total storage.
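To make the space and redundancy numbers above concrete, here is a small sketch of the arithmetic in shell. The function names are made up for this example, and the second function assumes the 4 disks are paired into mirrors as (0,1) and (2,3), which is my assumption rather than something measured on this box.

```shell
#!/bin/sh
# usable_gb LEVEL DISKS SIZE_GB: print the usable capacity of the array in GB.
# (Hypothetical helper for illustration only.)
usable_gb() {
    level=$1; disks=$2; size=$3
    case "$level" in
        5)  echo $(( (disks - 1) * size )) ;;  # one disk's worth of space holds parity
        10) echo $(( disks / 2 * size )) ;;    # every block is stored twice
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

# raid10_two_disk_survival: count how many two-disk failures a 4-disk raid 10
# survives, ASSUMING the mirror pairs are disks (0,1) and (2,3).
raid10_two_disk_survival() {
    survivable=0; total=0
    for a in 0 1 2 3; do
        for b in 0 1 2 3; do
            [ "$a" -lt "$b" ] || continue      # visit each pair once
            total=$((total + 1))
            # the array survives if the two failed disks sit in different mirrors
            if [ $((a / 2)) -ne $((b / 2)) ]; then
                survivable=$((survivable + 1))
            fi
        done
    done
    echo "$survivable of $total"
}

usable_gb 5 4 320            # 960 of 1280 GB raw, i.e. 75%
usable_gb 10 4 320           # 640 of 1280 GB raw, i.e. 50%
raid10_two_disk_survival     # 4 of 6
```

Under that pairing, raid 10 survives 4 of the 6 possible two-disk failures, while raid 5 survives none of them.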

Raid 5 should also have faster reads, and potentially faster writes, than raid 10. Why? Because I’m building a raid 5 array from 4 disks. That should allow for 3 disks to stripe the data. The only penalty will come when the parity needs to be read or written.

The raid 10 is just a straight stripe of mirrors, so no parity is used. This would normally give a big advantage to raid 10. But wait, in my setup I only have 4 disks, so only 2 disks (actually 2 mirrors) will be used for the stripe. Although there are fewer disks in the stripe, there is no parity penalty, which is why I want to test raid 5 and raid 10 with some benchmarks.

I’m not just going to run simple benchmark programs and record the results. I’m going to run the benchmarks first by themselves, and then again while the system is under load.

Since this server will be performing normal tasks to run a network, I’m more interested in performance vs total space or redundancy. Although redundancy is still important, performance out of the raid array is really what I’m looking for.

One could also argue about differences in build times or performance with a degraded array. This isn’t too much of a concern for me, since I predict that the server won’t spend much of its life in a degraded state. (Remember, I’m running Debian; these boxes don’t like to be down.)

So again, I’m really only concerned about actual every day performance.

So, I have my server built and the system booted. Let’s get to the benchmarks.

When I installed Sarge, I left some partitions free to create the raid arrays. I can use the tool mdadm to create any array I want from disk partitions.

I kept the partitions small so that build times wouldn’t take forever. The raid 5 array is created from 4 80 gig partitions. The raid 10 was created from the same 4 80 gig partitions. I started with raid 5, created the array, formatted it and mounted it.

After benchmarking the raid 5, I disassembled it and then went on to create the raid 10 array. I mounted it, and then performed the same tests.
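For reference, the create, format, mount and tear-down cycle described above might look roughly like this with mdadm. This is only a sketch: the partition names, /dev/md0, the mount point and the ext3 filesystem are all assumptions, and it uses md’s native raid10 level rather than two raid 1 arrays nested under a raid 0. By default it only prints the commands; running them for real needs root and wipes whatever is on those partitions.

```shell
#!/bin/sh
# Hypothetical partition names and mount point; adjust to your own layout.
PARTS="/dev/sda5 /dev/sdb5 /dev/sdc5 /dev/sdd5"
MNT=/mnt/raidtest

# Print each command by default. Set RUN=1 to really execute it
# (needs root and DESTROYS the data on $PARTS).
run() {
    if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$@"; fi
}

# build_array LEVEL: create the array, put a filesystem on it and mount it.
build_array() {
    level=$1
    # $PARTS is left unquoted on purpose so it splits into 4 arguments
    run mdadm --create /dev/md0 --level="$level" --raid-devices=4 $PARTS
    run mkfs.ext3 /dev/md0
    run mount /dev/md0 "$MNT"
}

# teardown_array: unmount, stop the array and clear the md superblocks
# so the same partitions can be reused for the next array.
teardown_array() {
    run umount "$MNT"
    run mdadm --stop /dev/md0
    run mdadm --zero-superblock $PARTS
}

build_array 5      # ... benchmark the raid 5 here ...
teardown_array
build_array 10     # ... then run the same tests on the raid 10 ...
```

Before benchmarking a real array it is worth waiting for the initial build to finish; progress shows up in /proc/mdstat.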

The Tests

I ran 3 tests, each test with and without load.

Also, each individual test was run 3 times and I averaged the results.
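Averaging the three runs is simple enough to do by hand, but a tiny helper keeps it honest. This is a sketch with a made-up function name, and the numbers in the example are placeholders, not my measured results.

```shell
#!/bin/sh
# average: read one number per line on stdin and print the mean
# with two decimals. (Hypothetical helper for illustration.)
average() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%.2f\n", sum / n }'
}

# Placeholder throughput values in MB/sec from three runs of one test
printf '%s\n' 10 20 30 | average   # prints 20.00
```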

The 3 tests were:

# bonnie -u root -f

# iozone -s 4020000 -t 1 -i 1 -i 0

# tiobench

I then performed the above tests again except with a stress program running in the background. The stress command was

# stress --cpu 8 --io 4 -d 2
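One way to script the "with load" runs is to start stress in the background, run the benchmark, and stop stress again afterwards. The wrapper below is a sketch; the function name and the LOAD_CMD variable are my own invention, not part of stress or of the benchmark tools.

```shell
#!/bin/sh
# Background load generator; override LOAD_CMD to try the wrapper
# without stress installed. (LOAD_CMD is a hypothetical variable.)
LOAD_CMD=${LOAD_CMD:-"stress --cpu 8 --io 4 -d 2"}

# run_under_load CMD...: start the load in the background, run CMD,
# then stop the load and return CMD's exit status.
run_under_load() {
    # unquoted on purpose so the load command splits into words
    $LOAD_CMD >/dev/null 2>&1 &
    load_pid=$!
    "$@"
    status=$?
    kill "$load_pid" 2>/dev/null   # ask the load process to terminate
    wait "$load_pid" 2>/dev/null   # reap it so it does not linger
    return "$status"
}

# Usage (run from a directory on the mounted array):
#   run_under_load bonnie -u root -f
#   run_under_load iozone -s 4020000 -t 1 -i 1 -i 0
#   run_under_load tiobench
```

Since stress writes its own temporary files with -d, it matters which directory it is started from; I’m assuming here that it runs from the same place as the benchmark.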

The results

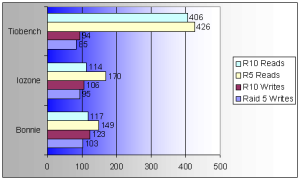

SW Raid 10 vs Raid 5 (Without Server Load)

All output is in MB/sec

[higher is better]

Note: I’m not sure why the tiobench read results are so high. Perhaps some of the results were being pulled from memory, and not from disk. Anyway, Raid 10 and Raid 5 showed similar results, so I’m just going with it.

SW raid 10 vs Raid 5 (With Server Load)

[Higher is better]

Conclusion

Even though the raid 5 has higher reads without load in some cases, Raid 10 seems to have better writes and better overall performance during load.

It would be interesting to see the same tests on hardware raid, since a raid 5 controller would calculate the parity itself instead of using CPU time.

Since disk space isn’t too important for me, I’ll be going with the raid 10. I like speed, and lots of it.

In the future, I’m also going to look at using XFS filesystems instead of EXT3. Apparently XFS has been around since the early 90’s, but has only been available on Linux since kernel 2.4. It has the potential to be more robust for holding netatalk files and may be a little quicker as well.